Brand reputation and the impact of Google SERP selections

The influence of company navigational brand searches on consumer views and how Google handles them.

This investigation began a few weeks ago with a discussion about Google’s selection of People Also Ask (PAA) results and their potential influence on brands.

In this article, I’ll present findings from an analysis of the sentiment expressed in PAA results across Fortune 500 companies in 2019. Our study uses Nozzle ranking data, which makes comparisons quick and easy: PAA results are extracted on a daily basis, and their sentiment is scored with Baidu’s open-source sentiment analysis engine, Senta, and the Google Natural Language API. Among the findings:

- There are clear winners and losers when it comes to brand-related content.

- A small number of domains hold the lion’s share of visibility in Fortune 500 brand search results.

- Some companies have relatively stable PAA results, while others have wildly varying ones.

- Google likes to tell users whether a company is any good.

- A handful of top websites frequently answer the question “Is this company legit?”

- The pandemic has had a significant impact on several PAA results.

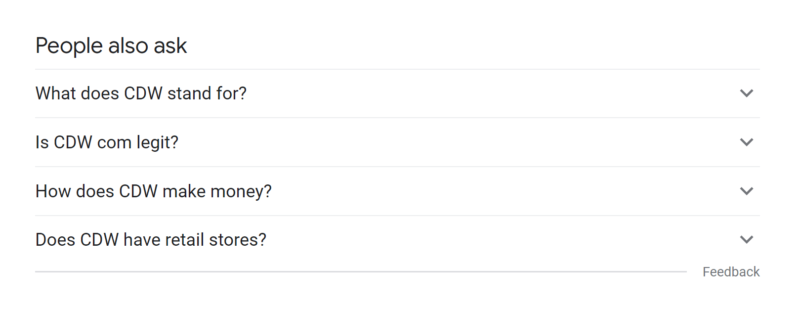

But first, for those who aren’t familiar with PAA results, here’s how they appear:

CDW

For many businesses, PAA results appear at the top of Google and Bing search results for many or all of their brand searches. The image above shows the results for the search term “CDW.” Because “CDW” has a search volume of 135,000 US searches each month, a substantial portion of the people searching for it may see “Is CDW.com legit?” every time they try to go to CDW’s website.

Some users genuinely want to know the answer. Take this two-year-old Reddit post, for example.

So is this a good thing or a bad thing? For users who are unaware that CDW has been a reputable B2B technology retailer since 1984, I see the benefit. But I also see the problem from CDW’s perspective: this may plant a sliver of doubt in customers’ minds 135,000 times per month. It’s also worth noting that, for those who do have this question, it’s well answered.

Processing the data

Now that we’ve covered PAAs and how they might influence brand perception, I’ll walk you through how we gathered the data and scored sentiment. Some readers enjoy this kind of detail, and it helps put the findings in context. If you only want to see the data, feel free to skip ahead.

To analyze corporate PAA results, we first needed a list of companies. This GitHub repository offers Fortune 500 company lists going back to 1955 in an easy-to-parse CSV format. To start collecting US Google results daily, we imported the company names into Nozzle (a rank-tracking tool we prefer for the granularity of its data). Nozzle stores its data in BigQuery, which makes it simple to transform into a format Google Data Studio can use.
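As a minimal sketch of the company-list step, the Python below reads a Fortune 500 CSV and returns unique company names in file order. The `rank` and `company` column names and the placeholder rows are assumptions for illustration; check the header of the repository’s CSV before adapting it.

```python
import csv
import io
from typing import List, TextIO


def load_company_names(csv_file: TextIO, name_column: str = "company") -> List[str]:
    """Return unique, non-blank company names from a CSV, in file order.

    The default column name "company" is an assumption; match it to the
    header of the file you actually download.
    """
    seen = set()
    names: List[str] = []
    for row in csv.DictReader(csv_file):
        name = (row.get(name_column) or "").strip()
        # Skip blank cells and repeats so each company is tracked only once.
        if name and name not in seen:
            seen.add(name)
            names.append(name)
    return names


# Placeholder rows standing in for the downloaded CSV.
sample = io.StringIO("rank,company\n1,Acme Corp\n2,Globex\n3,Acme Corp\n")
print(load_company_names(sample))  # ['Acme Corp', 'Globex']
```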

After tracking in Nozzle for a few days, we exported all of the PAA results that appeared for the company-name searches in Google to a CSV for further processing.
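The export step can be sketched as a filter over tracked SERP rows. This is a hypothetical illustration: the field names (`date`, `keyword`, `result_type`, `question`) and the `people_also_ask` label are made up for the example and are not Nozzle’s actual BigQuery schema.

```python
import csv
import io
from typing import Dict, Iterable, TextIO


def export_paa_rows(rows: Iterable[Dict[str, str]], out: TextIO) -> int:
    """Write only People Also Ask rows to CSV and return how many were written.

    Field names and the "people_also_ask" label are hypothetical placeholders.
    """
    fields = ["date", "keyword", "question"]
    writer = csv.DictWriter(out, fieldnames=fields)
    writer.writeheader()
    written = 0
    for row in rows:
        if row.get("result_type") != "people_also_ask":
            continue  # ignore organic results and other SERP features
        writer.writerow({f: row[f] for f in fields})
        written += 1
    return written


serp_rows = [
    {"date": "2020-12-08", "keyword": "cdw", "result_type": "people_also_ask",
     "question": "Is CDW.com legit?"},
    {"date": "2020-12-08", "keyword": "cdw", "result_type": "organic",
     "question": ""},
]
buffer = io.StringIO()
print(export_paa_rows(serp_rows, buffer))  # 1
```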

To add a sentiment score to the PAA questions, we used Baidu’s open-source Senta project. The Senta SKEP (Sentiment Knowledge Enhanced Pre-training) models are interesting because they pre-train the language model specifically for sentiment analysis: they use token masking and substitution in BERT-based models to focus on tokens that carry sentiment information and/or aspect-sentiment pairs.

Aspect-based model

After some testing, we found that the aspect-based tasks performed better than the sentence classification tasks. We used the company name as the aspect. In essence, the aspect is the focal point of the sentiment; in classic aspect-based sentiment analysis, it is often a component of a product or service that receives the sentiment (e.g., the screen in “My MacBook’s screen is too fuzzy”). We also rescaled the model’s output to a range of -1 to 1 so it could be compared with Google’s NLP API scores; by default, it is simply labeled “positive” or “negative.”
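The rescaling to the -1 to 1 range can be done in several ways; one simple option, sketched below, takes the positive-class probability minus the negative-class probability. This assumes the model exposes two class probabilities ordered (negative, positive); that ordering is not taken from Senta’s documentation, and the exact transformation used in the study may differ.

```python
from typing import Sequence


def to_signed_score(probs: Sequence[float]) -> float:
    """Map [p_negative, p_positive] class probabilities to a score in [-1, 1].

    The (negative, positive) ordering is an assumption about the model output.
    """
    p_neg, p_pos = probs
    total = p_neg + p_pos
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    # Normalise first so the result stays in [-1, 1] even for unnormalised
    # non-negative inputs, then take the positive-minus-negative margin.
    return (p_pos - p_neg) / total


print(to_signed_score([0.25, 0.75]))  # 0.5
print(to_signed_score([0.5, 0.5]))    # 0.0
print(to_signed_score([1.0, 0.0]))    # -1.0
```

A neutral 50/50 split lands at 0, which lines up with how Google’s API places neutral text near zero.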

We then processed the PAA questions with Google’s Natural Language API, which yielded a comparable score. We chose not to use Google’s entity-based sentiment because its identification of the companies as entities seemed hit-or-miss. We also excluded magnitude, which measures the overall strength of the sentiment, because Senta has no equivalent.

We wanted to publish our code so that others could try it for themselves. We built a Google Colab notebook with instructions for installing Senta, downloading our dataset, and assigning a sentiment score to each row. We also included code to pull sentiment from the Google Language API; however, this requires API access and uploading a service account JSON file.
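A rough sketch of the Google side, assuming the `google-cloud-language` Python client (`language_v1`) and a service account key file. The helper names `score_questions` and `google_analyzer` are hypothetical, and the analyzer is passed in as a function so the deduplicating loop can be tried without credentials. Only the document-level score is kept, matching the decision to drop magnitude.

```python
from typing import Callable, Dict, Iterable


def score_questions(
    questions: Iterable[str], analyze: Callable[[str], float]
) -> Dict[str, float]:
    """Score each distinct question once with the supplied analyzer function."""
    # dict.fromkeys drops duplicate questions while keeping first-seen order.
    return {q: analyze(q) for q in dict.fromkeys(questions)}


def google_analyzer(key_path: str) -> Callable[[str], float]:
    """Build an analyzer backed by the Cloud Natural Language API.

    Needs the google-cloud-language package, an enabled API, and a service
    account JSON key; key_path is a placeholder for that file.
    """
    # Imported lazily so score_questions works without the package installed.
    from google.cloud import language_v1

    client = language_v1.LanguageServiceClient.from_service_account_json(key_path)

    def analyze(text: str) -> float:
        document = language_v1.Document(
            content=text, type_=language_v1.Document.Type.PLAIN_TEXT
        )
        response = client.analyze_sentiment(request={"document": document})
        # Keep the document-level score in [-1, 1]; magnitude is ignored.
        return response.document_sentiment.score

    return analyze


# With credentials in place (not run here):
# scores = score_questions(paa_questions, google_analyzer("service-account.json"))
```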

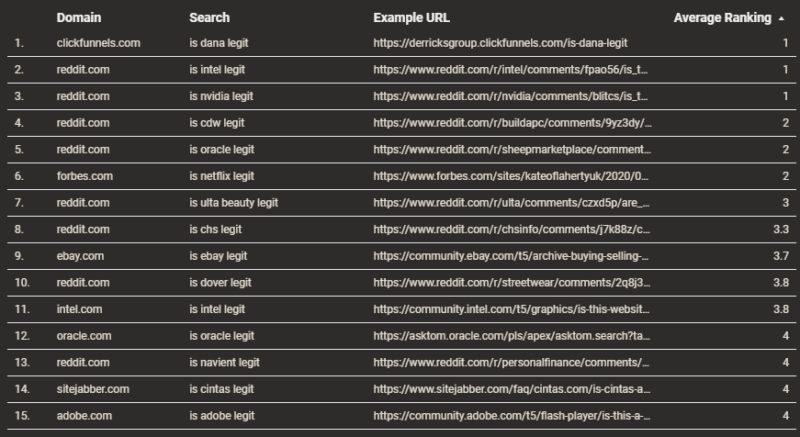

Finally, we set up rank tracking in Nozzle for all 500 companies to monitor the websites that rank for searches like “is <company> legit?” and “is <company> legal?” to see whether we could identify which domains were driving this content. We also used revenue change data from this year’s Fortune 500 list as another layer against which to measure the impact of sentiment.
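The tracked legitimacy searches follow directly from the company list: two templates per company, so 500 companies yield 1,000 keywords. A minimal sketch, assuming lowercase keywords without a trailing question mark (a formatting choice, not something the study specifies):

```python
from typing import List

# Hypothetical templates mirroring the "is <company> legit?" / "is <company> legal?" searches.
TEMPLATES = ("is {} legit", "is {} legal")


def legitimacy_keywords(companies: List[str]) -> List[str]:
    """Expand each company name into one keyword per template."""
    return [t.format(c.lower()) for c in companies for t in TEMPLATES]


print(legitimacy_keywords(["CDW", "Best Buy"]))
# ['is cdw legit', 'is cdw legal', 'is best buy legit', 'is best buy legal']
```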

Analyzing the data

Now we’ve arrived at the most exciting part of the analysis. We created a Data Studio dashboard so readers can explore the data we gathered. In the following paragraphs, we’ll discuss what piqued our interest, but we’re always interested in hearing what others find, here or on Twitter.

The dashboard is divided into ten separate views, with the raw data on the last two pages. Each view asks a question that it tries to answer with data.

Domain share of voice

Company PAA changes over time

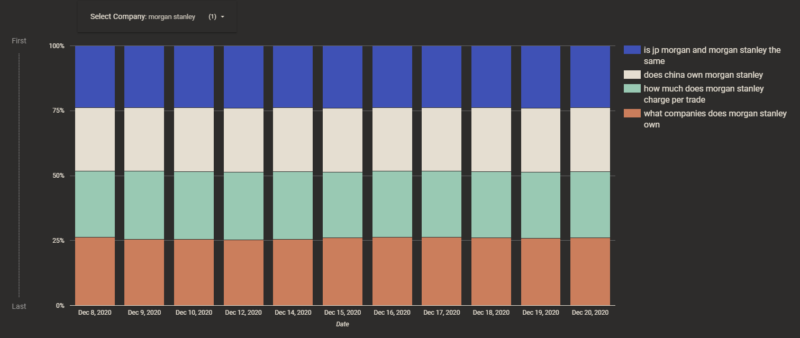

This view examines how PAA results vary from day to day. We began collecting data on December 8, 2020, so this graph will get more interesting as we move into 2021. Here are a few of my favorite companies.

Slow and steady wins the race at Morgan Stanley, as one might expect from a financial services firm. Every day since December 8, the same four results have been shown.

Contrast this with Microsoft, one of the companies with the most varied PAAs, whose mix of questions changed virtually every day.

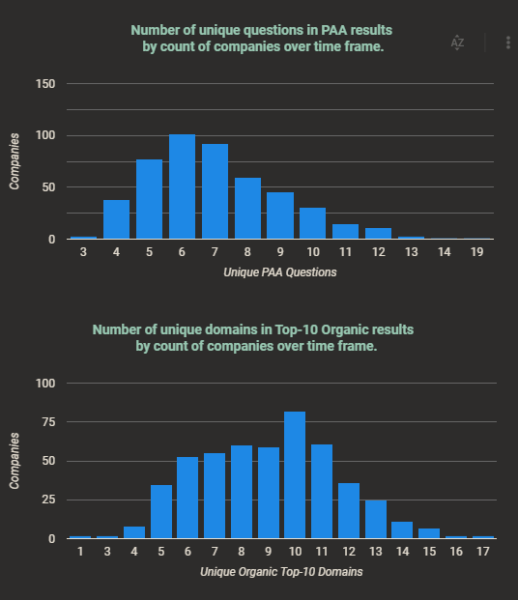

Over the roughly two weeks we collected data, the median number of unique PAA results per company was seven. Over the same period, the median number of distinct domains in the top 10 results was exactly 10. The graph below shows the distribution of unique results across all companies.

The brand-voice heroes here are CenterPoint Energy and FedEx, whose primary brand searches are answered entirely by their own content on a single site.
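Both medians in this view (unique PAA questions and distinct top-10 domains per company) come from the same calculation: collapse each company’s daily observations into a set, then take the median of the set sizes. A minimal sketch, assuming the data has been flattened into hypothetical `(company, item)` pairs with placeholder names:

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, Set, Tuple


def median_unique_per_company(observations: Iterable[Tuple[str, str]]) -> float:
    """Median count of distinct items (PAA questions or domains) per company.

    observations holds (company, item) pairs collected across all tracked days.
    """
    unique: Dict[str, Set[str]] = defaultdict(set)
    for company, item in observations:
        unique[company].add(item)  # sets drop repeats seen on later days
    return median(len(items) for items in unique.values())


obs = [
    ("Acme", "q1"), ("Acme", "q1"), ("Acme", "q2"),
    ("Globex", "q1"),
    ("Initech", "q1"), ("Initech", "q2"), ("Initech", "q3"),
]
print(median_unique_per_company(obs))  # 2
```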

PAA themes for companies

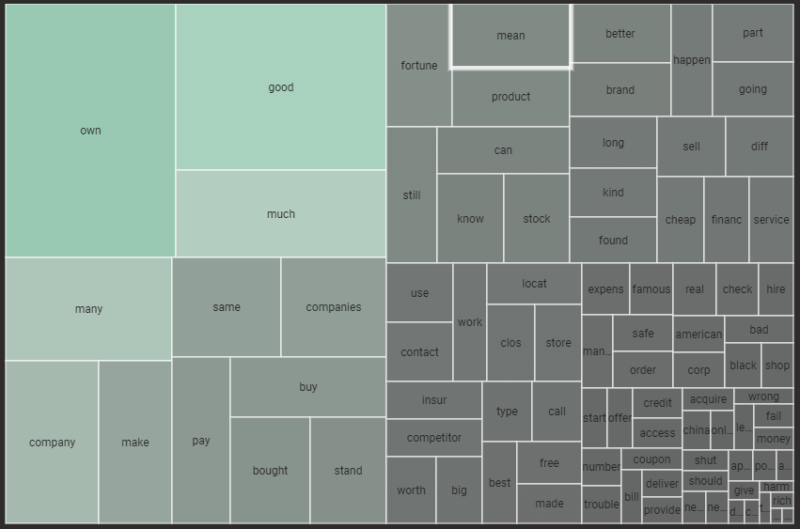

For me, this was one of the more interesting sections. To build a view that might tell us what matters to people when it comes to companies, we searched the PAA questions for the most frequently used word stems.

In approximately half of the cases, Google infers that there is interest in who owns the company. For 37 percent of companies, Google also surfaces information on whether the company is “good.”

The saddest cohorts were the “going” and “clos” groupings (“clos” is used here to capture words like “close,” “closed,” and “closure”), which appeared to address pandemic-era worries about the solvency of popular companies.

Even though Walmart’s profit increased by 123 percent in 2020, one of its most frequently asked questions is “Is Walmart Really Closing?” Walmart has closed a number of stores in recent years, many of them smaller-format Express stores, but it is not going out of business.
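Stem cohorts such as “clos” can be approximated with a prefix match over each company’s PAA words, counting the share of companies with at least one hit. A minimal sketch, assuming questions are grouped by company in a dictionary; the punctuation stripping and whitespace tokenisation are simplifications, and the company names are placeholders.

```python
from typing import Dict, List

PUNCTUATION = "?.,!\"'"


def stem_share(paa_by_company: Dict[str, List[str]], stem: str) -> float:
    """Fraction of companies with at least one PAA word starting with stem.

    A prefix match lets "clos" catch "close", "closed", "closing", and "closure".
    """
    if not paa_by_company:
        return 0.0
    hits = 0
    for questions in paa_by_company.values():
        words = (w.strip(PUNCTUATION).lower() for q in questions for w in q.split())
        if any(w.startswith(stem) for w in words):
            hits += 1
    return hits / len(paa_by_company)


paa = {
    "Acme": ["Is Acme really closing?"],
    "Globex": ["Who owns Globex?"],
    "Initech": ["Is Initech closed today?", "Is Initech good?"],
    "Umbrella": ["Is Umbrella legit?"],
}
print(stem_share(paa, "clos"))  # 0.5
print(stem_share(paa, "own"))   # 0.25
```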

PAA results

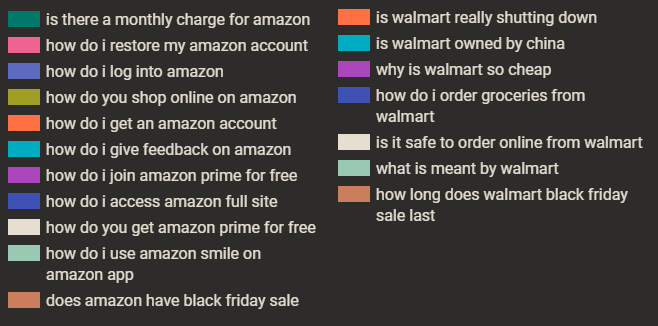

Looking at the PAA results from the perspective of online vs. brick-and-mortar companies, a comparison of Amazon and Walmart shows that Google’s question selection distinctly favors one over the other.

Obviously, the line between online and offline is blurring, as Walmart now sells online and Amazon now has physical stores. But this demonstrates how question selection can generate real, tangible goodwill for competitors.

Also, after seeing “Is <company> legit?” on several company searches, I decided to look into it. In fact, across all 500 companies, only eight of the PAA results we collected questioned a company’s legitimacy:

- are bold precious metals legit

- is a legitimate

Still, it could have been a lot worse. What if you were the brand manager for one of the companies with PAA questions in the “bad” cohort?

The next view, PAA Question Exploration, was a lot of fun. After categorizing the PAA results by theme and question type, we created a tool that lets you build out question phrases to drill down to specific company queries. Clicking (1) in the image below automatically prompts you with the next possible refinement options (2). Then click (3) to see the company questions that match the pattern you selected.

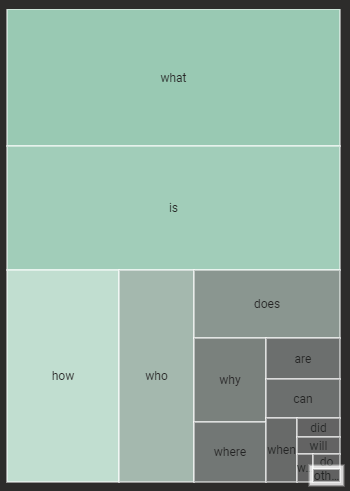
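Conceptually, the drill-down works like walking a prefix tree of question words: each click fixes one more word and narrows the next options. A small sketch of that idea, with whitespace tokenisation and placeholder questions as assumptions; it illustrates the behaviour and is not how the Data Studio view is actually built.

```python
from collections import Counter
from typing import List


def next_word_options(questions: List[str], prefix: List[str]) -> Counter:
    """Count which words follow a selected prefix across PAA questions.

    prefix is a list of lowercase words, e.g. ["who", "owns"]; an empty
    prefix returns the distribution of opening words.
    """
    n = len(prefix)
    options: Counter = Counter()
    for question in questions:
        words = question.lower().rstrip("?").split()
        # Only questions that match the whole prefix and continue past it count.
        if words[:n] == prefix and len(words) > n:
            options[words[n]] += 1
    return options


questions = ["Who owns Acme?", "Who owns Globex?", "Who is the CEO of Initech?"]
print(next_word_options(questions, ["who"]))          # Counter({'owns': 2, 'is': 1})
print(next_word_options(questions, ["who", "owns"]))  # Counter({'acme': 1, 'globex': 1})
```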

When it comes to question words, and a little fun, “what” leads the five Ws, appearing in approximately 29 percent of all PAAs gathered. The love child, “is,” comes in a close second, followed by “how” and “who.”

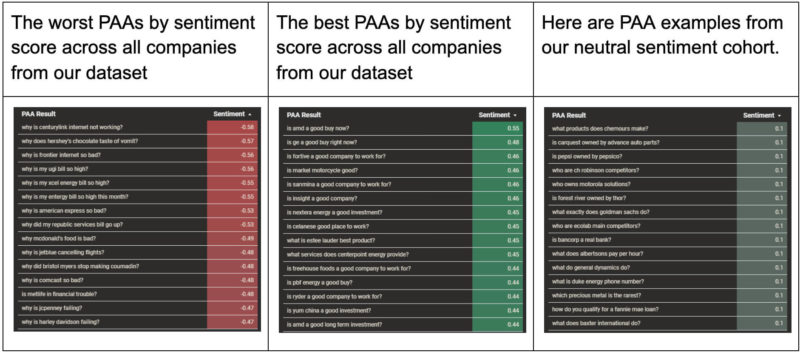
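A per-question share like the 29 percent figure for “what” can be computed by checking each question for the word as a whole token. A minimal sketch, assuming simple whitespace tokenisation and placeholder questions; it counts a word anywhere in the question, which is an assumption (counting only the opening word would be a different measure).

```python
from typing import Dict, Iterable, List


def word_share(questions: List[str], words: Iterable[str]) -> Dict[str, float]:
    """Share of questions containing each word as a whole, case-insensitive token."""
    token_sets = [set(q.lower().rstrip("?").split()) for q in questions]
    total = len(token_sets) or 1  # avoid dividing by zero on empty input
    return {w: sum(w in tokens for tokens in token_sets) / total for w in words}


questions = [
    "What is Acme?",
    "Is Acme legit?",
    "How does Globex make money?",
    "What does Initech do?",
]
print(word_share(questions, ["what", "is", "how", "who"]))
# {'what': 0.5, 'is': 0.5, 'how': 0.25, 'who': 0.0}
```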

Company sentiment

In the section on how we scored sentiment, we described the Senta models from Baidu Research. Because we liked aspects of each model’s results, we used the average of the two best-performing Senta models and Google’s NLP API sentiment to produce the most balanced score. This view lets you pick a specific model, but the averaged view is the default and the one we’ll use here.
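The averaged score can be sketched as a plain mean of the per-model scores once all of them share the -1 to 1 scale. Equal weighting is an assumption here, since the text only says the scores were averaged; the model keys and values are hypothetical and illustrative, not study results.

```python
from statistics import fmean
from typing import Dict


def combined_sentiment(model_scores: Dict[str, float]) -> float:
    """Unweighted mean of per-model sentiment scores already scaled to [-1, 1]."""
    if not model_scores:
        raise ValueError("need at least one model score")
    return fmean(model_scores.values())


# Hypothetical model keys; the values are illustrative only.
print(combined_sentiment({"senta_a": 0.5, "senta_b": 0.25, "google_nlp": -0.75}))  # 0.0
```

An unweighted mean keeps any one model from dominating; a weighted mean would be the natural variant if one model proved more reliable.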

These Pfizer results suggest that Google recognizes the need to spotlight current issues.

In terms of the balance of questions and the usefulness of the results, CarMax was my favorite of all the companies in the sentiment view.

We looked at revenue change figures from this year’s Fortune 500 list to see whether there was a link between a company’s sentiment and its financial performance. While causation in the figure below is debatable, it is interesting that companies with more negative average sentiment had lower revenue growth.

2020 was a difficult year, which likely explains the many questions in the results about corporate financial solvency. But if Google mirrors customer questions and attitudes, these results may simply reflect that.
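One way to quantify the sentiment-revenue link is a Pearson correlation over paired per-company values. The study does not say which measure it used, so Pearson is an assumed choice here, and a coefficient alone says nothing about causation.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient for paired per-company values."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (spread_x * spread_y)
```

Pass each company’s average negative-sentiment score as `xs` and its revenue change as `ys`; a negative coefficient would match the pattern described in the text.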

The legitimacy question

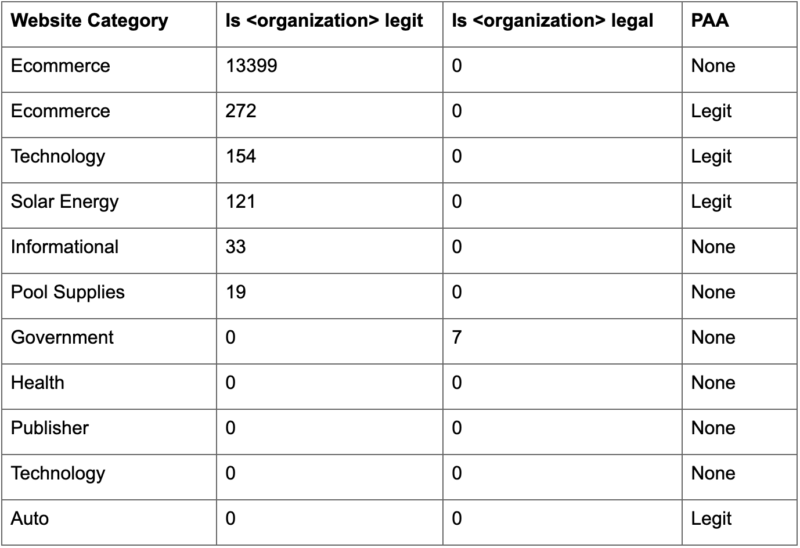

Our dashboard’s final view covers the domains that answer the question “Is <company> legit?” We’re tracking 1,000 searches: 500 for “Is <company> legit” and 500 for “Is <company> legal.” Wikipedia appears in the top 10 results for about half of the 1,000 searches, almost entirely for the “legal” searches.

Results for the “legit” queries are dominated by company review sites and job review sites. The prominence of career sites seems misaligned with the goal of letting potential customers know whether a company is a real business. Perhaps it is a sign that these searches are common among job seekers. However, I can’t picture a potential employee searching “is best buy legit?”

Legit and legal

Looking at the domains that target “legit” and “legal” queries, meaning those words appear in the URL slug, reveals a couple of interesting insights.
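Checking whether a ranking URL targets these terms comes down to inspecting its slug. A minimal sketch, assuming “slug” means the final path segment (query strings ignored) and using a plain substring match; the example URLs are placeholders.

```python
from typing import FrozenSet, Iterable
from urllib.parse import urlparse


def slug_terms(url: str, terms: Iterable[str] = ("legit", "legal")) -> FrozenSet[str]:
    """Return which target terms appear in a URL's final path segment (its slug)."""
    path = urlparse(url).path.rstrip("/")
    slug = path.rsplit("/", 1)[-1].lower()
    # Substring match: "legit" also catches "legitimate", but "legal" would
    # also match "illegal", so review hits before drawing conclusions.
    return frozenset(t for t in terms if t in slug)


print(sorted(slug_terms("https://www.example.com/blog/is-acme-legit/")))  # ['legit']
print(sorted(slug_terms("https://www.example.com/legal")))  # ['legal']
print(sorted(slug_terms("https://www.example.com/")))  # []
```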

Specific legitimacy questions appear to be frequently discussed on forums like Reddit. In many cases, the result is relevant to the query but not to the intent of the search. In an Nvidia forum, for example, a user asks whether a supposedly Nvidia-sponsored campaign is legitimate.

Many “legal” searches lead to the company’s own legal policy page. Isn’t that a good outcome?

Finally, Daniel Pati, SEO Lead at Cartridge Save, pointed out a site in our data that appears to be “going after” these “legit” searches by targeting company-specific queries. I’m not going to name the site, but you can look it up if you want.

If you’re going to list questions as if they were asked by real people, you should probably mix them up a little. We must have counted over 300 pages, all with identical titles except for the company name.

Takeaways

I hope you had as much fun reading this as I did putting it together. Derek Perkins, Patrick Stox, and others at Locomotive were instrumental in reviewing and critiquing the Data Studio dashboard.

Finally, I’m not sure how I feel about Google surfacing content in navigational brand searches that raises questions about a company’s legality or authenticity. The table below was created using sites we have access to through Google Search Console; the values represent 12-month impressions for the phrase (or similar phrases). Yes, there is a point in a company’s life cycle when that question is relevant to users. But I’m not convinced a 30-year-old, well-established technology supplier should be treated the same way.

One thing that struck me as odd during this process was that PAA results appear to be unrelated to genuine user search demand and are instead driven by the content available online. “Why is coke so dangerous for you” receives 20 searches per month, yet it is surfaced on a brand that receives millions of searches per month. “Why is apple so bad” is searched by 70 people each month, yet it is likely shown to tens of millions. “Is Walmart Really Closing Down” is searched zero times per month and appears on a brand that receives 55 million searches per month. To dig further, we added a view named “PAA Search Volume and Sentiment” to the Data Studio dashboard. As the graph shows, the combined US search volume of the PAA questions was less than 500 for the vast majority of companies.
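The combined-volume comparison amounts to summing the monthly search volume of each company’s PAA questions and checking it against a cutoff. A minimal sketch, assuming volumes (for example, from a keyword tool) have already been joined to each question; the 500 threshold mirrors the text, while the companies and volumes below are placeholders, not figures from the study.

```python
from typing import Dict, List


def companies_below_volume(paa_volumes: Dict[str, List[int]], threshold: int = 500) -> List[str]:
    """Companies whose PAA questions have a combined monthly US search volume below threshold."""
    return sorted(name for name, volumes in paa_volumes.items() if sum(volumes) < threshold)


# Placeholder companies and volumes, not figures from the study.
volumes = {"Acme": [0, 120], "Globex": [70, 900], "Initech": [20]}
print(companies_below_volume(volumes))  # ['Acme', 'Initech']
```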

The important point here is that I believe there is a difference between answering a user’s query with available content, whether positive or negative in sentiment, and actively suggesting information that can substantively influence a user’s opinion of the subject being searched. This is especially true for navigational searches, where the user is often just trying to get to a website rather than seeking feedback on a company.

The post Brand reputation and the impact of Google SERP selections appeared first on Soft Trending.